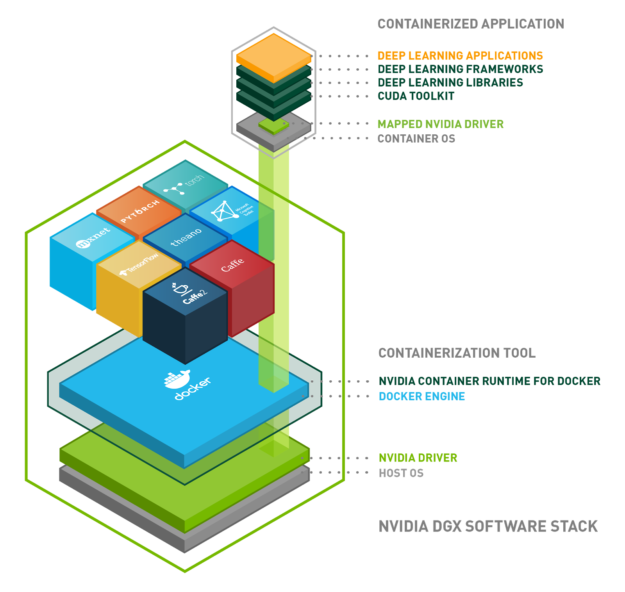

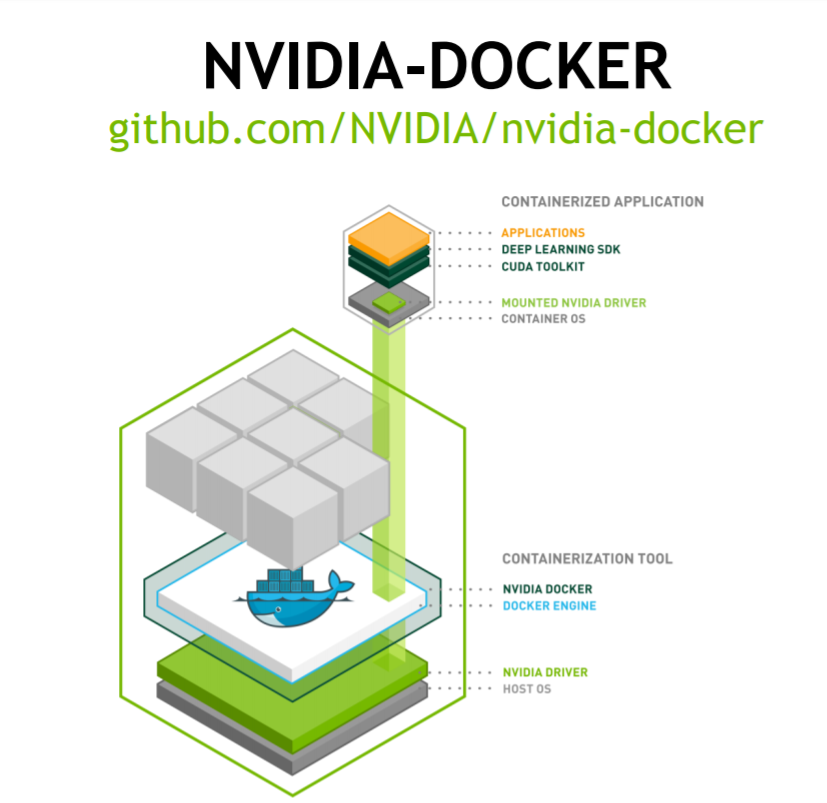

Accelerated model training and AI assisted annotation of medical images with the NVIDIA Clara Train application development framework on AWS | Containers

GPU_Acceleration_Using_CUDA_C_CPP/README.md at master · ashokyannam/GPU_Acceleration_Using_CUDA_C_CPP · GitHub

GitHub - NVIDIA/fsi-samples: A collection of open-source GPU-accelerated Python tools and examples for quantitative analyst tasks, leveraging the RAPIDS AI project, Numba, cuDF, and Dask.

How to integrate NVIDIA DeepStream on Jetson Modules with AWS IoT Core and AWS IoT Greengrass | The Internet of Things on AWS – Official Blog

GitHub - arunkumar-singh/GPU-Multi-Agent-Traj-Opt: Repository associated with the paper "GPU Accelerated Convex Approximations for Fast Multi-Agent Trajectory Optimization". Source code will be uploaded here soon.

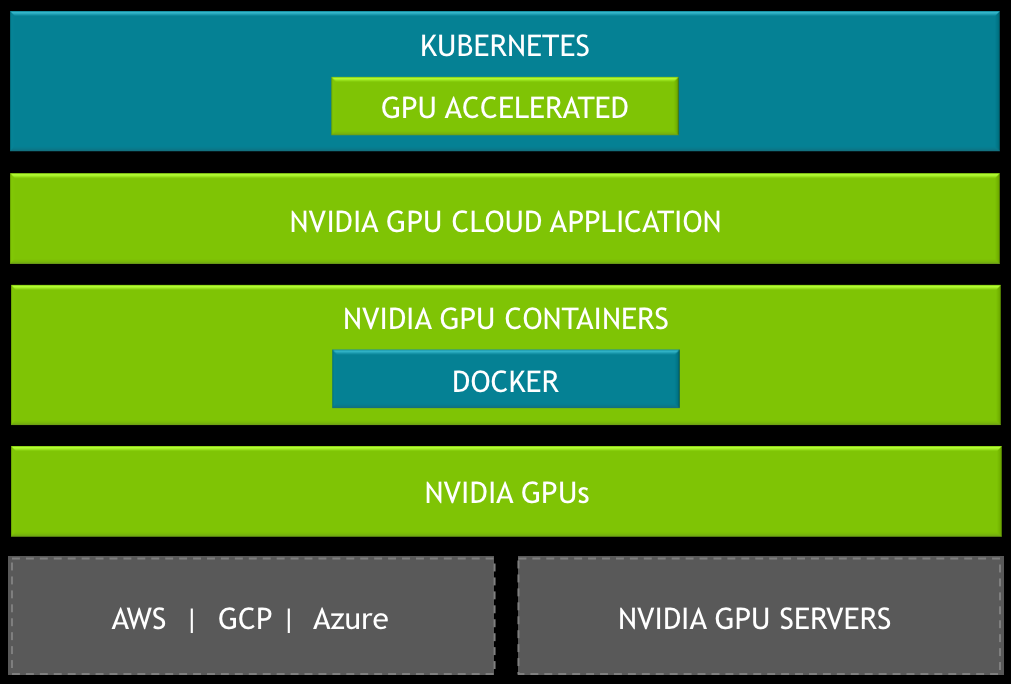

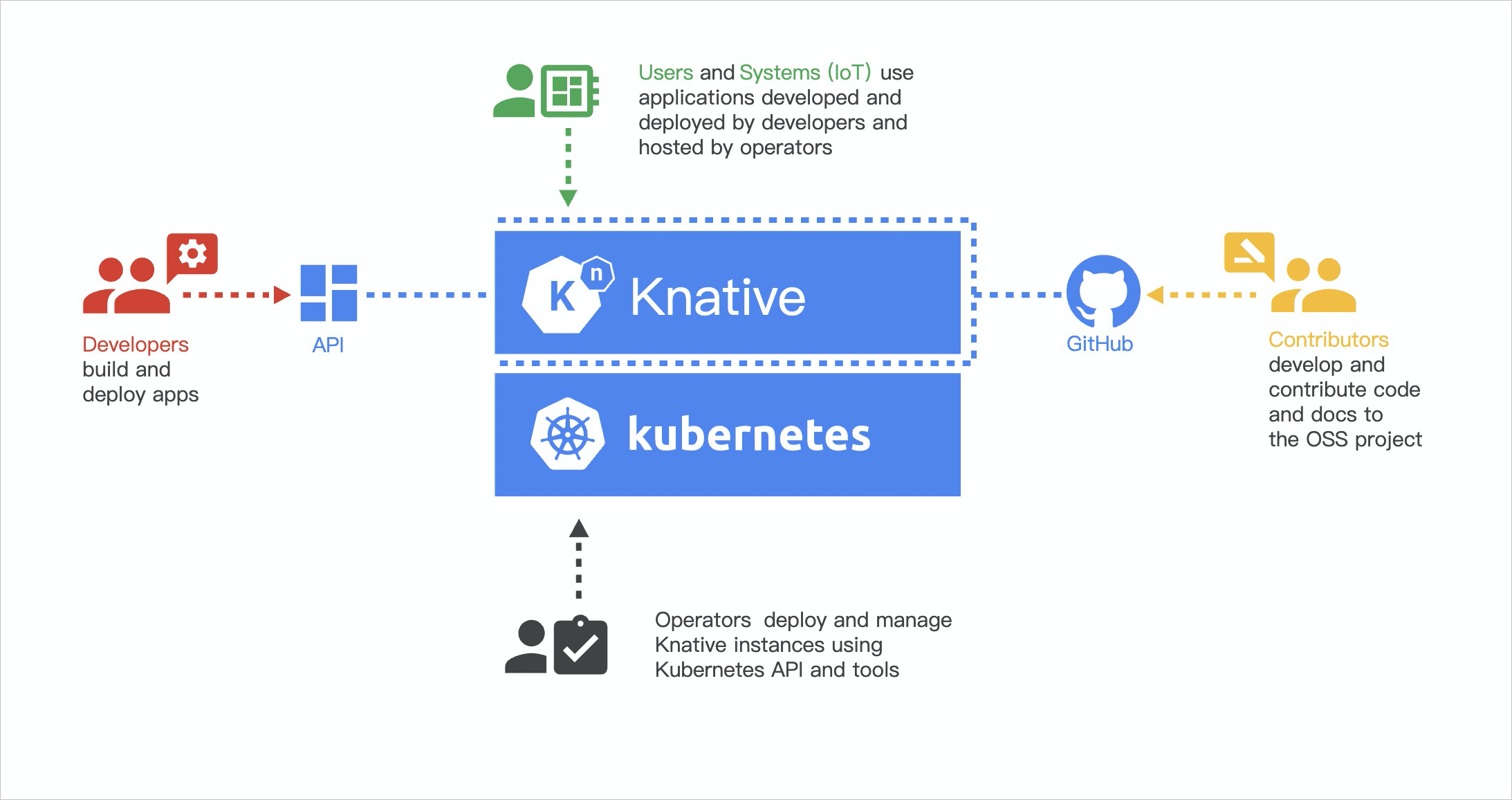

GitHub - src-d/k8s-nvidia-gpu-overcommit: Collection of tools and examples for managing Accelerated workloads in Kubernetes Engine